In a current research revealed within the journal PLOS Digital Well being, researchers assessed and in contrast the medical information and diagnostic reasoning capabilities of huge language fashions (LLMs) with these of human consultants within the discipline of ophthalmology.

Research: Massive language fashions method expert-level medical information and reasoning in ophthalmology: A head-to-head cross-sectional research. Picture Credit score: ozrimoz / Shutterstock

Research: Massive language fashions method expert-level medical information and reasoning in ophthalmology: A head-to-head cross-sectional research. Picture Credit score: ozrimoz / Shutterstock

Background

Generative Pre-trained Transformers (GPTs), GPT-3.5 and GPT-4, are superior language fashions educated on huge internet-based datasets. They energy ChatGPT, a conversational synthetic intelligence (AI) notable for its medical utility success. Regardless of earlier fashions struggling in specialised medical checks, GPT-4 exhibits vital developments. Issues persist about information ‘contamination’ and the medical relevance of take a look at scores. Additional analysis is required to validate language fashions’ medical applicability and security in real-world medical settings and tackle present limitations of their specialised information and reasoning capabilities.

Concerning the research

Questions for the Fellowship of the Royal School of Ophthalmologists (FRCOphth) Half 2 examination have been extracted from a specialised textbook that isn’t broadly out there on-line, minimizing the chance of those questions showing within the coaching information of LLMs. A complete of 360 multiple-choice questions spanning six chapters have been extracted, and a set of 90 questions was remoted for a mock examination used to match the efficiency of LLMs and medical doctors. Two researchers aligned these questions with the classes specified by the Royal School of Ophthalmologists, and so they categorised every query in keeping with Bloom’s taxonomy ranges of cognitive processes. Questions with non-text parts that have been unsuitable for LLM enter have been excluded.

The examination questions have been enter into variations of ChatGPT (GPT-3.5 and GPT-4) to gather responses, repeating the method as much as 3 times per query the place crucial. As soon as different fashions like Bard and HuggingChat grew to become out there, related testing was performed. The proper solutions, as outlined by the textbook, have been famous for comparability.

5 skilled ophthalmologists, three ophthalmology trainees, and two generalist junior medical doctors independently accomplished the mock examination to judge the fashions’ sensible applicability. Their solutions have been then in contrast in opposition to the LLMs’ responses. Submit-exam, these ophthalmologists assessed the LLMs’ solutions utilizing a Likert scale to price accuracy and relevance, blind to which mannequin offered which reply.

This research’s statistical design was strong sufficient to detect vital efficiency variations between LLMs and human medical doctors, aiming to check the null speculation that each would carry out equally. Varied statistical checks, together with chi-squared and paired t-tests, have been utilized to match efficiency and assess the consistency and reliability of LLM responses in opposition to human solutions.

Research outcomes

Out of 360 questions contained within the textbook for the FRCOphth Half 2 examination, 347 have been chosen to be used, together with 87 from the mock examination chapter. The exclusions primarily concerned questions with pictures or tables, which have been unsuitable for enter into LLM interfaces.

Efficiency comparisons revealed that GPT-4 considerably outperformed GPT-3.5, with an accurate reply price of 61.7% in comparison with 48.41%. This development in GPT-4’s capabilities was constant throughout several types of questions and topics, as outlined by the Royal School of Ophthalmologists. Detailed outcomes and statistical analyses additional confirmed the strong efficiency of GPT-4, making it a aggressive software even amongst different LLMs and human medical doctors, notably junior medical doctors and trainees.

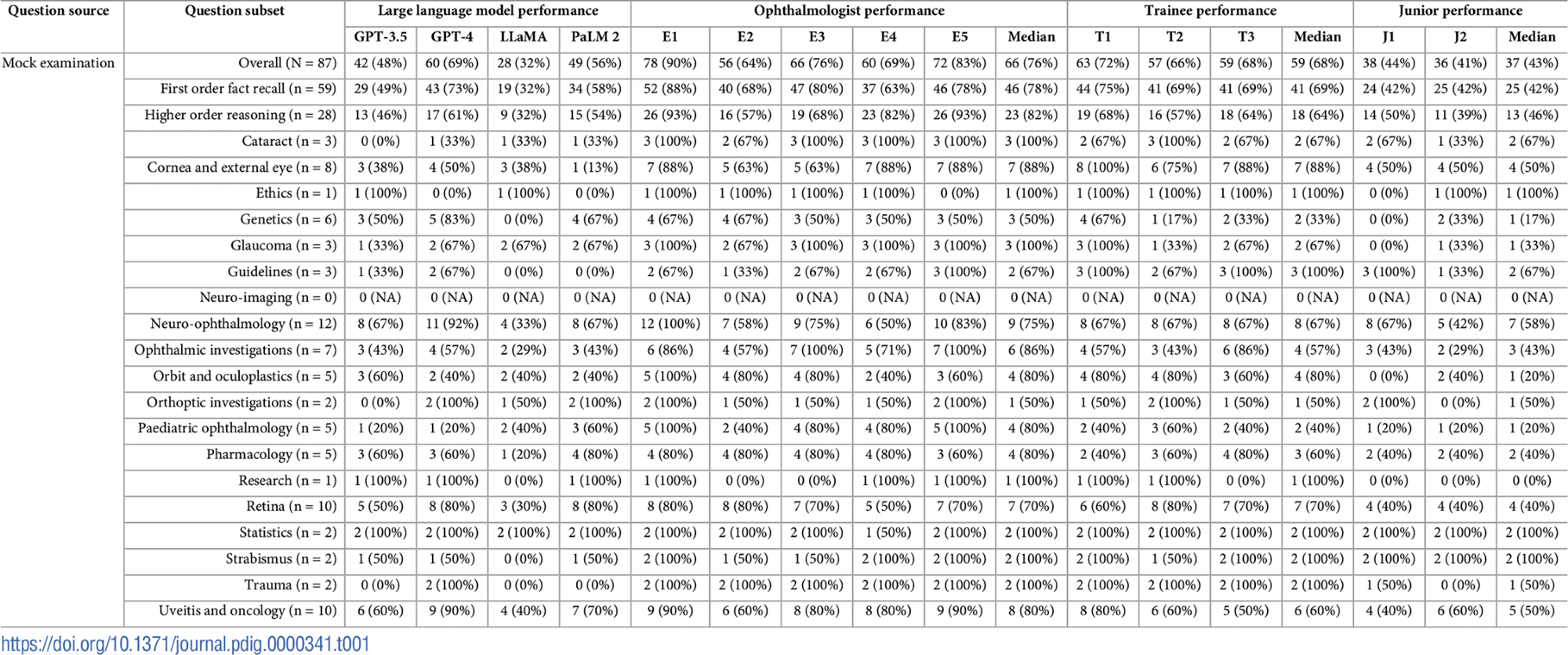

Examination traits and granular efficiency information. Query topic and sort distributions introduced alongside scores attained by LLMs (GPT-3.5, GPT-4, LLaMA, and PaLM 2), skilled ophthalmologists (E1-E5), ophthalmology trainees (T1-T3), and unspecialised junior medical doctors (J1-J2). Median scores don’t essentially sum to the general median rating, as fractional scores are not possible.

Examination traits and granular efficiency information. Query topic and sort distributions introduced alongside scores attained by LLMs (GPT-3.5, GPT-4, LLaMA, and PaLM 2), skilled ophthalmologists (E1-E5), ophthalmology trainees (T1-T3), and unspecialised junior medical doctors (J1-J2). Median scores don’t essentially sum to the general median rating, as fractional scores are not possible.

Within the particularly tailor-made 87-question mock examination, GPT-4 not solely led among the many LLMs but in addition scored comparably to skilled ophthalmologists and considerably higher than junior and trainee medical doctors. The efficiency throughout totally different participant teams illustrated that whereas the skilled ophthalmologists maintained the very best accuracy, the trainees approached these ranges, far outpacing the junior medical doctors not specialised in ophthalmology.

Statistical checks additionally highlighted that the settlement between the solutions offered by totally different LLMs and human contributors was typically low to average, indicating variability in reasoning and information utility among the many teams. This was notably evident when evaluating the variations in information between the fashions and human medical doctors.

An in depth examination of the mock questions in opposition to actual examination requirements indicated that the mock setup intently mirrored the precise FRCOphth Half 2 Written Examination in issue and construction, as agreed upon by the ophthalmologists concerned. This alignment ensured that the analysis of LLMs and human responses was grounded in a practical and clinically related context.

Furthermore, the qualitative suggestions from the ophthalmologists confirmed a powerful desire for GPT-4 over GPT-3.5, correlating with the quantitative efficiency information. The upper accuracy and relevance rankings for GPT-4 underscored its potential utility in medical settings, notably in ophthalmology.

Lastly, an evaluation of the cases the place all LLMs failed to offer the proper reply didn’t present any constant patterns associated to the complexity or subject material of the questions.